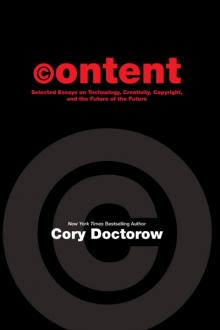

Content by Cory Doctorow

And so it has been for the last 13 years. The companies that claim the ability to regulate humanity's Right to Know have been tireless in their endeavors to prevent the inevitable. They won most of the legislative battles in the U.S. and abroad, having purchased all the government money could buy. They even won most of the contests in court. They created digital rights management software schemes that behaved rather like computer viruses.

Indeed, they did about everything they could short of seriously examining the actual economics of the situation - it has never been proven to me that illegal downloads are more like shoplifted goods than viral marketing - or trying to come up with a business model that the market might embrace.

Had it been left to the stewardship of the usual suspects, there would scarcely be a word or a note online that you didn't have to pay to experience. There would be increasingly little free speech or any consequence, since free speech is not something anyone can o


- Author: Cory Doctorow

- Performer: 1892391813


Lapsarianism — the idea of a paradise lost, a fall from grace that makes each year worse than the last — is the predominant future feeling for many people. It’s easy to see why: an imperfectly remembered golden childhood gives way to the worries of adulthood and physical senescence. Surely the world is getting worse: nothing tastes as good as it did when we were six, everything hurts all the time, and our matured gonads drive us into frenzies of bizarre, self-destructive behavior.

Lapsarianism dominates the Abrahamic faiths. I have an Orthodox Jewish friend whose tradition holds that each generation of rabbis is necessarily less perfect than the rabbis that came before, since each generation is more removed from the perfection of the Garden. Therefore, no rabbi is allowed to overturn any of his forebears’ wisdom, since they are all, by definition, smarter than him.

The natural endpoint of Lapsarianism is apocalypse. If things get worse, and worse, and worse, eventually they’ll just run out of worseness. Eventually, they’ll bottom out, a kind of rotten death of the universe when Lapsarian entropy hits the nadir and takes us all with it.

Running counter to Lapsarianism is progressivism: the Enlightenment ideal of a world of great people standing on the shoulders of giants. Each of us contributes to improving the world’s storehouse of knowledge (and thus its capacity for bringing joy to all of us), and our descendants and proteges take our work and improve on it. The very idea of “progress” runs counter to the idea of Lapsarianism and the fall: it is the idea that we, as a species, are falling in reverse, combing back the wild tangle of entropy into a neat, tidy braid.

Of course, progress must also have a boundary condition — if only because we eventually run out of imaginary ways that the human condition can improve. And science fiction has a name for the upper bound of progress, a name for the progressive apocalypse:

We call it the Singularity.

Vernor Vinge’s Singularity takes place when our technology reaches a stage that allows us to “upload” our minds into software, run them at faster, hotter speeds than our neurological wetware substrate allows for, and create multiple, parallel instances of ourselves. After the Singularity, nothing is predictable because everything is possible. We will cease to be human and become (as the title of Rudy Rucker’s next novel would have it) Postsingular.

The Singularity is what happens when we have so much progress that we run out of progress. It’s the apocalypse that ends the human race in rapture and joy. Indeed, Ken MacLeod calls the Singularity “the rapture of the nerds,” an apt description for the mirror-world progressive version of the Lapsarian apocalypse.

At the end of the day, both progress and the fall from grace are illusions. The central thesis of Stumbling on Happiness is that human beings are remarkably bad at predicting what will make us happy. Our predictions are skewed by our imperfect memories and our capacity for filling the future with the present day.

The future is gnarlier than futurism. NCC-1701 probably wouldn’t send out transporter-equipped drones — instead, it would likely find itself on missions whose ethos, mores, and rationale are largely incomprehensible to us, and so obvious to its crew that they couldn’t hope to explain them.

Science fiction is the literature of the present, and the present is the only era that we can hope to understand, because it’s the only era that lets us check our observations and predictions against reality.

$$$$

When the Singularity is More Than a Literary Device: An Interview with Futurist-Inventor Ray Kurzweil

(Originally published in Asimov’s Science Fiction Magazine, June 2005)

It’s not clear to me whether the Singularity is a technical belief system or a spiritual one.

The Singularity — a notion that’s crept into a lot of skiffy, and whose most articulate in-genre spokesmodel is Vernor Vinge — describes the black hole in history that will be created at the moment when human intelligence can be digitized. When the speed and scope of our cognition is hitched to the price-performance curve of microprocessors, our “progress” will double every eighteen months, and then every twelve months, and then every ten, and eventually, every five seconds.

Singularities are, literally, holes in space from whence no information can emerge, and so SF writers occasionally mutter about how hard it is to tell a story set after the information Singularity. Everything will be different. What it means to be human will be so different that what it means to be in danger, or happy, or sad, or any of the other elements that make up the squeeze-and-release tension in a good yarn will be unrecognizable to us pre-Singletons.

It’s a neat conceit to write around. I’ve committed Singularity a couple of times, usually in collaboration with gonzo Singleton Charlie Stross, the mad antipope of the Singularity. But those stories have the same relation to futurism as romance novels do to love: a shared jumping-off point, but radically different morphologies.

Of course, the Singularity isn’t just a conceit for noodling with in the pages of the pulps: it’s the subject of serious-minded punditry, futurism, and even science.

Ray Kurzweil is one such pundit-futurist-scientist. He’s a serial entrepreneur who founded successful businesses that advanced the fields of optical character recognition (machine-reading) software, text-to-speech synthesis, synthetic musical instrument simulation, computer-based speech recognition, and stock-market analysis. He cured his own Type-II diabetes through a careful review of the literature and the judicious application of first principles and reason. To a casual observer, Kurzweil appears to be the star of some kind of Heinlein novel, stealing fire from the gods and embarking on a quest to bring his maverick ideas to the public despite the dismissals of the establishment, getting rich in the process.

Kurzweil believes in the Singularity. In his 1990 manifesto, “The Age of Intelligent Machines,” Kurzweil persuasively argued that we were on the brink of meaningful machine intelligence. A decade later, he continued the argument in a book called The Age of Spiritual Machines, whose most audacious claim is that the world’s computational capacity has been slowly doubling since the crust first cooled (and before!), and that the doubling interval has been growing shorter and shorter with each passing year, so that now we see it reflected in the computer industry’s Moore’s Law, which predicts that microprocessors will get twice as powerful for half the cost about every eighteen months. The breathtaking sweep of this trend has an obvious conclusion: computers more powerful than people; more powerful than we can comprehend.

Now Kurzweil has published two more books, The Singularity Is Near: When Humans Transcend Biology (Viking, Spring 2005) and Fantastic Voyage: Live Long Enough to Live Forever (with Terry Grossman, Rodale, November 2004). The former is a technological roadmap for creating the conditions necessary for ascent into Singularity; the latter is a book about life-prolonging technologies that will assist baby-boomers in living long enough to see the day when technological immortality is achieved.

See what I meant about his being a Heinlein hero?

I still don’t know if the Singularity is a spiritual or a technological belief system. It has all the trappings of spirituality, to be sure. If you are pure and kosher, if you live right and if your society is just, then you will live to see a moment of Rapture when your flesh will slough away leaving nothing behind but your ka, your soul, your consciousness, to ascend to an immortal and pure state.

I wrote a novel called Down and Out in the Magic Kingdom where characters could make backups of themselves and recover from them if something bad happened, like catching a cold or being assassinated. It raises a lot of existential questions, most prominently: are you still you when you’ve been restored from backup?

The traditional AI answer is the Turing Test, invented by Alan Turing, the gay pioneer of cryptography and artificial intelligence who was forced by the British government to take hormone treatments to “cure” him of his homosexuality, culminating in his suicide in 1954. Turing cut through the existentialism about measuring whether a machine is intelligent by proposing a parlor game: a computer sits behind a locked door with a chat program, and a person sits behind another locked door with his own chat program, and they both try to convince a judge that they are real people. If the computer fools a human judge into thinking that it’s a person, then to all intents and purposes, it’s a person.

So how do you know if the backed-up you that you’ve restored into a new body — or a jar with a speaker attached to it — is really you? Well, you can ask it some questions, and if it answers the same way that you do, you’re talking to a faithful copy of yourself.

Sounds good. But the me who sent his first story into Asimov’s seventeen years ago couldn’t answer the question, “Write a story for Asimov’s” the same way the me of today could. Does that mean I’m not me anymore?

Kurzweil has the answer.

“If you follow that logic, then if you were to take me ten years ago, I could not pass for myself in a Ray Kurzweil Turing Test. But once the requisite uploading technology becomes available a few decades hence, you could make a perfect-enough copy of me, and it would pass the Ray Kurzweil Turing Test. The copy doesn’t have to match the quantum state of my every neuron, either: if you meet me the next day, I’d pass the Ray Kurzweil Turing Test. Nevertheless, none of the quantum states in my brain would be the same. There are quite a few changes that each of us undergo from day to day, we don’t examine the assumption that we are the same person closely.

“We gradually change our pattern of atoms and neurons but we very rapidly change the particles the pattern is made up of. We used to think that in the brain — the physical part of us most closely associated with our identity — cells change very slowly, but it turns out that the components of the neurons, the tubules and so forth, turn over in only days. I’m a completely different set of particles from what I was a week ago.

“Consciousness is a difficult subject, and I’m always surprised by how many people talk about consciousness routinely as if it could be easily and readily tested scientifically. But we can’t postulate a consciousness detector that does not have some assumptions about consciousness built into it.

“Science is about objective third party observations and logical deductions from them. Consciousness is about first-person, subjective experience, and there’s a fundamental gap there. We live in a world of assumptions about consciousness. We share the assumption that other human beings are conscious, for example. But that breaks down when we go outside of humans, when we consider, for example, animals. Some say only humans are conscious and animals are instinctive and machinelike. Others see humanlike behavior in an animal and consider the animal conscious, but even these observers don’t generally attribute consciousness to animals that aren’t humanlike.

“When machines are complex enough to have responses recognizable as emotions, those machines will be more humanlike than animals.”

The Kurzweil Singularity goes like this: computers get better and smaller. Our ability to measure the world gains precision and grows ever cheaper. Eventually, we can measure the world inside the brain and make a copy of it in a computer that’s as fast and complex as a brain, and voila, intelligence.

Here in the twenty-first century we like to view ourselves as ambulatory brains, plugged into meat-puppets that lug our precious grey matter from place to place. We tend to think of that grey matter
